Data Engineering Tools written in Rust

This blog post gives an overview of the data engineering tools available in Rust and their advantages, and discusses why Rust is a great choice for data engineering.

Python is a popular language for data engineers, but its dynamic typing means many errors surface only at runtime, and its interpreter overhead limits raw performance. Data engineering is an essential part of modern software development, and Rust is an increasingly popular language for this work. Rust compiles to native code and has no garbage collector, which gives fast computation and predictable, low latency. Its ownership model and borrow checker also rule out whole classes of bugs, such as use-after-free and data races, at compile time. Together with zero-cost abstractions and a strong type system, these features make Rust an excellent choice for data engineering.

Rust also offers a growing number of libraries and frameworks for data engineering. They cover tasks such as data analysis, data visualization, and machine learning, which makes data engineering in Rust easier and more efficient.



DataFusion

DataFusion is an extensible SQL query engine that uses Apache Arrow as its in-memory format. It offers SQL and DataFrame APIs similar to those of Apache Spark, but runs on a single machine. Distributed execution is provided by the related Ballista project. By building on Arrow's columnar memory layout, DataFusion processes data quickly and accurately, and it lets developers build advanced applications that query millions of records at once. DataFusion also reads from a wide variety of data sources, including local files and object stores such as Amazon S3.

Highlight features:

- Feature-rich SQL support and DataFrame API.
- Blazingly fast, vectorized, multi-threaded, streaming execution engine.
- Native support for Parquet, CSV, JSON, and Avro.
- Extension points: user-defined functions, custom plan and execution nodes.
- Streaming, async I/O from object stores.
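To make the semantics of the DataFrame query that follows concrete, here is a plain-Rust sketch of what it computes: keep rows where `a <= b`, group by `a`, and take `MIN(b)` per group. It also illustrates the general two-phase technique (partial results per partition, then a final merge) that multi-threaded engines commonly use for aggregations. This is a sketch under stated assumptions, not DataFusion's implementation. Plain `Vec`s stand in for Arrow arrays, and `Batch`, `partial_min`, `merge`, and `query` are hypothetical names.

```rust
use std::collections::HashMap;
use std::thread;

/// Hypothetical columnar batch with two i64 columns `a` and `b` of equal length.
/// (Plain Vecs stand in for Arrow arrays to keep the sketch dependency-free.)
struct Batch {
    a: Vec<i64>,
    b: Vec<i64>,
}

/// Partial phase: filter rows where a <= b, then keep MIN(b) per group `a`.
fn partial_min(batch: &Batch) -> HashMap<i64, i64> {
    let mut acc = HashMap::new();
    for (&a, &b) in batch.a.iter().zip(&batch.b) {
        if a <= b {
            acc.entry(a)
                .and_modify(|m: &mut i64| *m = (*m).min(b))
                .or_insert(b);
        }
    }
    acc
}

/// Final phase: merge per-partition partial minimums into one result.
fn merge(parts: Vec<HashMap<i64, i64>>) -> HashMap<i64, i64> {
    let mut out = HashMap::new();
    for part in parts {
        for (a, m) in part {
            out.entry(a)
                .and_modify(|cur: &mut i64| *cur = (*cur).min(m))
                .or_insert(m);
        }
    }
    out
}

/// Runs the partial phase for each partition on its own thread, then merges.
fn query(partitions: &[Batch]) -> HashMap<i64, i64> {
    let parts: Vec<HashMap<i64, i64>> = thread::scope(|s| {
        let handles: Vec<_> = partitions
            .iter()
            .map(|p| s.spawn(move || partial_min(p)))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    merge(parts)
}

fn main() {
    let partitions = vec![
        Batch { a: vec![1, 1, 3], b: vec![2, 5, 1] },
        Batch { a: vec![1, 2], b: vec![4, 7] },
    ];
    let mut rows: Vec<(i64, i64)> = query(&partitions).into_iter().collect();
    rows.sort();
    println!("{:?}", rows); // [(1, 2), (2, 7)]
}
```

Splitting the work this way lets each thread aggregate its own partition without locking. Only the small per-group partial results need to be combined at the end.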

```rust
use datafusion::prelude::*;

#[tokio::main]
async fn main() -> datafusion::error::Result<()> {
    // create the dataframe
    let ctx = SessionContext::new();
    let df = ctx.read_csv("tests/example.csv", CsvReadOptions::new()).await?;

    // keep rows where a <= b, compute MIN(b) per value of a, return at most 100 rows
    let df = df
        .filter(col("a").lt_eq(col("b")))?
        .aggregate(vec![col("a")], vec![min(col("b"))])?
        .limit(0, Some(100))?;

    // execute and print results
    df.show().await?;
    Ok(())
}
```

Output:

```
+---+--------+
| a | MIN(b) |
+---+--------+
| 1 | 2      |
+---+--------+
```

Polars

Polars is a blazingly fast DataFrame library implemented in Rust that uses the Apache Arrow columnar format for memory management. It is often described as a faster alternative to pandas. See H2O.ai's db-benchmark for performance comparisons.

In a columnar format, the values of each column are stored contiguously in memory. Operations that scan a few columns therefore read only the data they need and can use SIMD instructions. Combined with Rust's performance, this makes Polars an ideal choice for data scientists looking for an efficient and powerful DataFrame library.
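The columnar idea can be shown without any library. The sketch below stores the same hypothetical `TradeRow` records row-wise and column-wise. In the columnar layout, summing one column reads a single contiguous buffer. This illustrates the principle behind Arrow and Polars, not their actual memory layout, which also tracks validity bitmaps, offsets, and alignment.

```rust
/// Row-oriented layout ("array of structs"): all fields of a record sit together.
#[derive(Clone, Copy)]
struct TradeRow {
    id: u64,
    price: f64,
    qty: u32,
}

/// Column-oriented layout ("struct of arrays"), the idea behind Arrow:
/// each column lives in its own contiguous buffer.
#[allow(dead_code)]
struct TradeColumns {
    id: Vec<u64>,
    price: Vec<f64>,
    qty: Vec<u32>,
}

impl TradeColumns {
    /// Transposes rows into columns.
    fn from_rows(rows: &[TradeRow]) -> Self {
        TradeColumns {
            id: rows.iter().map(|r| r.id).collect(),
            price: rows.iter().map(|r| r.price).collect(),
            qty: rows.iter().map(|r| r.qty).collect(),
        }
    }
}

/// Row layout: the loop strides over whole records, so the cache lines it
/// loads also carry the unused `id` and `price` fields.
fn total_qty_rows(rows: &[TradeRow]) -> u64 {
    rows.iter().map(|r| u64::from(r.qty)).sum()
}

/// Columnar layout: the loop reads only the contiguous `qty` buffer, which is
/// cache-friendly and a good candidate for compiler auto-vectorization (SIMD).
fn total_qty_columns(cols: &TradeColumns) -> u64 {
    cols.qty.iter().map(|&q| u64::from(q)).sum()
}

fn main() {
    let rows = vec![
        TradeRow { id: 1, price: 10.5, qty: 3 },
        TradeRow { id: 2, price: 99.0, qty: 7 },
        TradeRow { id: 3, price: 1.25, qty: 10 },
    ];
    let cols = TradeColumns::from_rows(&rows);
    // Same answer, different memory access pattern.
    assert_eq!(total_qty_rows(&rows), total_qty_columns(&cols));
    println!("total qty = {}", total_qty_columns(&cols)); // total qty = 20
}
```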

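```rust
// Requires the polars crate with its lazy API and CSV reader enabled. Method
// names match polars releases from early 2023; newer releases renamed some of
// them (e.g. `groupby` -> `group_by`, `with_delimiter` -> `with_separator`).
use polars::prelude::*;

fn main() -> PolarsResult<()> {
    let df = LazyCsvReader::new("reddit.csv")
        .has_header(true)
        .with_delimiter(b',')
        .finish()?
        // one output row per distinct comment_karma value
        .groupby([col("comment_karma")])
        .agg([
            col("name").n_unique().alias("unique_names"),
            col("link_karma").max(),
        ])
        // run the query on only the first 100 rows read from the file
        .fetch(100)?;
    println!("{df}");
    Ok(())
}
```

Highlight features: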

- Lazy | eager execution
- Multi-threaded
- SIMD
- Query optimization
- Powerful expression API
- Hybrid streaming (larger-than-RAM datasets)
- Rust | Python | NodeJS | …
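The lazy Polars query above produces one output row per `comment_karma` value. Each row holds the number of distinct `name`s and the maximum `link_karma`. The plain-Rust sketch below shows the same aggregation. The `User` type and sample rows are hypothetical. `.fetch(100)` is a debugging helper that runs the query on only the first 100 rows read by each scan, and the sketch mimics it by truncating its input. Polars does not guarantee group order by default. This sketch returns groups sorted by key.

```rust
use std::collections::{BTreeMap, HashSet};

/// Hypothetical row type holding the reddit.csv columns the query touches.
struct User<'a> {
    name: &'a str,
    comment_karma: i64,
    link_karma: i64,
}

/// Per-group state: the distinct names seen and the running max of link_karma.
struct Group<'a> {
    names: HashSet<&'a str>,
    max_link_karma: i64,
}

/// Plain-Rust equivalent of grouping by comment_karma and aggregating
/// n_unique(name) and max(link_karma).
/// Returns comment_karma -> (unique_names, max link_karma), sorted by key.
fn group_stats<'a>(users: &[User<'a>]) -> BTreeMap<i64, (usize, i64)> {
    let mut groups: BTreeMap<i64, Group<'a>> = BTreeMap::new();
    for u in users {
        let g = groups.entry(u.comment_karma).or_insert_with(|| Group {
            names: HashSet::new(),
            max_link_karma: i64::MIN,
        });
        g.names.insert(u.name);
        g.max_link_karma = g.max_link_karma.max(u.link_karma);
    }
    groups
        .into_iter()
        .map(|(karma, g)| (karma, (g.names.len(), g.max_link_karma)))
        .collect()
}

fn main() {
    let users = vec![
        User { name: "alice", comment_karma: 10, link_karma: 5 },
        User { name: "bob", comment_karma: 10, link_karma: 9 },
        User { name: "alice", comment_karma: 10, link_karma: 1 },
        User { name: "carol", comment_karma: 42, link_karma: 3 },
    ];
    // Mimic `.fetch(100)`: only the first 100 input rows are scanned.
    let scanned = &users[..users.len().min(100)];
    for (karma, (unique_names, max_link)) in group_stats(scanned) {
        println!("comment_karma={karma} unique_names={unique_names} link_karma={max_link}");
    }
}
```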

Delta Lake Rust

Delta Lake Rust (delta-rs) provides a native Rust interface that gives low-level access to Delta tables. It can be used with data processing frameworks such as DataFusion, Ballista, Polars, and Vega, and it also offers bindings for higher-level languages such as Python and Ruby.
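Delta Lake is an open table format. A table is a set of Parquet data files plus a `_delta_log/` directory of numbered JSON commits (`00000000000000000000.json`, `00000000000000000001.json`, …). Each commit lists actions such as adding or removing a data file. A reader reconstructs any table version by replaying the log up to that version, and this is what makes time travel possible. The sketch below models only `add` and `remove` actions in memory. It does not use the delta-rs (`deltalake` crate) API. It also skips JSON parsing, checkpoints, and the other action types. The file names are hypothetical.

```rust
use std::collections::BTreeSet;

/// Simplified subset of Delta Lake log actions. The real protocol also has
/// metaData, protocol, commitInfo and others, stored as JSON lines.
enum Action {
    Add { path: String },
    Remove { path: String },
}

/// One commit, i.e. the contents of one numbered JSON file in `_delta_log/`.
type Commit = Vec<Action>;

/// Replays commits 0..=version and returns the data files active at that version.
fn active_files(log: &[Commit], version: usize) -> BTreeSet<String> {
    let mut files = BTreeSet::new();
    for commit in log.iter().take(version + 1) {
        for action in commit {
            match action {
                Action::Add { path } => {
                    files.insert(path.clone());
                }
                Action::Remove { path } => {
                    files.remove(path);
                }
            }
        }
    }
    files
}

fn main() {
    let log: Vec<Commit> = vec![
        // Version 0: the initial write adds two data files.
        vec![
            Action::Add { path: "part-0.parquet".to_string() },
            Action::Add { path: "part-1.parquet".to_string() },
        ],
        // Version 1: a rewrite (e.g. compaction) swaps them for one file.
        vec![
            Action::Remove { path: "part-0.parquet".to_string() },
            Action::Remove { path: "part-1.parquet".to_string() },
            Action::Add { path: "part-2.parquet".to_string() },
        ],
    ];
    println!("v0: {:?}", active_files(&log, 0)); // v0: {"part-0.parquet", "part-1.parquet"}
    println!("v1: {:?}", active_files(&log, 1)); // v1: {"part-2.parquet"}
}
```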

Vector.dev

A high-performance observability data pipeline for collecting system data (logs, metrics, and traces).

Vector is an end-to-end observability data pipeline that puts you in control. It collects, transforms, and routes your logs, metrics, and traces to any vendor you choose. Vector helps reduce costs, enrich data, and keep data secure. It is open source, and its maintainers claim it is up to 10 times faster than alternative solutions.

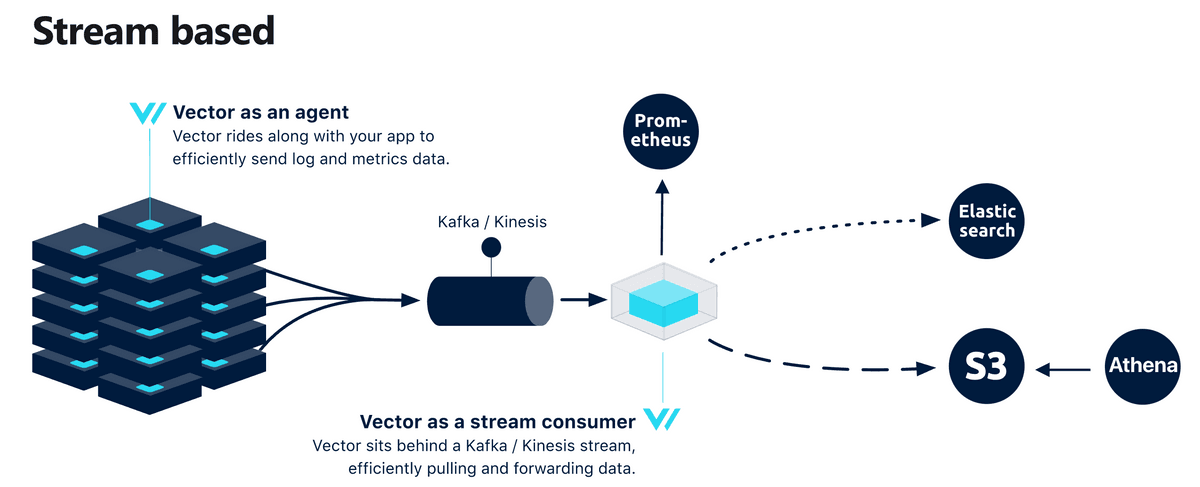

Vector can be deployed in several topologies to collect and forward data: distributed, centralized, or stream-based.
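In the centralized topology, agents on each host forward their data to a central aggregator, which processes and routes it. In the distributed topology, agents send data straight to its destinations. In the stream-based topology, a broker such as Kafka sits in between. The toy model below shows the centralized data flow in plain Rust. Threads play the role of hypothetical agents, and a `std::sync::mpsc` channel replaces the network. It says nothing about how Vector itself is implemented.

```rust
use std::collections::BTreeMap;
use std::sync::mpsc;
use std::thread;

/// A toy log event: the host that produced it and the raw line.
struct Event {
    host: String,
    message: String,
}

/// Hypothetical model of the centralized topology: one agent thread per host
/// forwards events over a channel to a single aggregator thread.
fn run_centralized(hosts: &[&str], lines_per_host: usize) -> BTreeMap<String, usize> {
    let (tx, rx) = mpsc::channel::<Event>();
    thread::scope(|s| {
        // Aggregator: the one place where events are filtered and counted.
        // Its loop ends once every sender has been dropped.
        let aggregator = s.spawn(move || {
            let mut per_host: BTreeMap<String, usize> = BTreeMap::new();
            for event in rx {
                if event.message.is_empty() {
                    continue; // drop empty events
                }
                *per_host.entry(event.host).or_insert(0) += 1;
            }
            per_host
        });
        // Agents: each ships its local log lines to the aggregator.
        for &host in hosts {
            let tx = tx.clone();
            s.spawn(move || {
                for i in 0..lines_per_host {
                    let message = format!("{host}: log line {i}");
                    tx.send(Event { host: host.to_string(), message }).unwrap();
                }
            });
        }
        // Drop the original sender so the channel closes when all agents finish.
        drop(tx);
        aggregator.join().unwrap()
    })
}

fn main() {
    let counts = run_centralized(&["web-1", "web-2", "db-1"], 3);
    println!("{:?}", counts); // {"db-1": 3, "web-1": 3, "web-2": 3}
}
```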

To get started, follow Vector's quickstart guide or install Vector.

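Create a configuration file called vector.toml with the following contents, which tell Vector how to collect, transform, and sink data. This example generates 100 demo syslog events, parses each one with a remap transform, and prints the result to the console as JSON.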

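```toml
# Source: generate 100 fake syslog lines
[sources.generate_syslog]
type = "demo_logs"
format = "syslog"
count = 100

# Transform: parse each line and merge the parsed fields into the event
[transforms.remap_syslog]
inputs = ["generate_syslog"]
type = "remap"
source = '''
  structured = parse_syslog!(.message)
  . = merge(., structured)
'''

# Sink: print each event to stdout as JSON
[sinks.emit_syslog]
inputs = ["remap_syslog"]
type = "console"
encoding.codec = "json"
```

Run it with `vector --config ./vector.toml`.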
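The remap transform is where the work happens. The VRL program parses the raw `message` field and merges the resulting fields back into the event. The plain-Rust sketch below mirrors that step on a single event. Its `parse_syslog` is a hypothetical, heavily simplified RFC 5424 parser and is not VRL's function. It accepts only lines whose structured-data field is the nil value `-`, keeps facility and severity as numeric codes, and uses field names chosen for illustration.

```rust
use std::collections::BTreeMap;

/// A log event as a flat map of fields (a simplification of Vector's event model).
type Event = BTreeMap<String, String>;

/// Hypothetical, heavily simplified RFC 5424 parser. It accepts only
/// `<PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID - MSG`.
fn parse_syslog(line: &str) -> Option<Event> {
    let (pri, rest) = line.strip_prefix('<')?.split_once('>')?;
    let pri: u8 = pri.parse().ok()?;
    let mut parts = rest.splitn(8, ' ');
    let mut ev = Event::new();
    // PRI = facility * 8 + severity.
    ev.insert("facility".into(), (pri / 8).to_string());
    ev.insert("severity".into(), (pri % 8).to_string());
    for key in ["version", "timestamp", "hostname", "appname", "procid", "msgid"] {
        ev.insert(key.into(), parts.next()?.to_string());
    }
    if parts.next()? != "-" {
        return None; // structured data is not handled in this sketch
    }
    ev.insert("message".into(), parts.next().unwrap_or("").to_string());
    Some(ev)
}

/// Mimics `. = merge(., structured)`: parsed fields are written into the event,
/// overwriting existing keys such as `message`. Where VRL's `!` would raise an
/// error on unparsable input, this sketch simply leaves the event unchanged.
fn remap(mut event: Event) -> Event {
    let raw = event.get("message").cloned().unwrap_or_default();
    if let Some(structured) = parse_syslog(&raw) {
        event.extend(structured);
    }
    event
}

fn main() {
    // Source: one raw line, standing in for an event from `demo_logs`.
    let mut event = Event::new();
    event.insert(
        "message".into(),
        "<13>1 2023-01-01T00:00:00Z host1 app 123 ID47 - hello world".into(),
    );
    // Transform, then "sink" to the console (Debug output instead of JSON).
    println!("{:?}", remap(event));
}
```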

See the full article at my website: https://blog.duyet.net/2023/01/data-engineering-rust-tools.html